Apple Launches Live Captions

Live Captions, which offers capabilities comparable to Android's Live Caption, will be available on iPhone, iPad, and Mac. For deaf users, this means on-screen text is generated automatically from any audio content, such as a FaceTime call, a social media app, or streaming content, which makes conversations easier to follow. The caption display is customizable, so users can adjust the font size to their liking. In FaceTime calls, transcribed dialogue will be automatically attributed to each participant. A text-to-speech option will also let users type a response and have it spoken aloud. Captions are generated on-device, as they are on Android, so Apple will not be able to listen in on any of this.

Interesting Upgrades from Apple

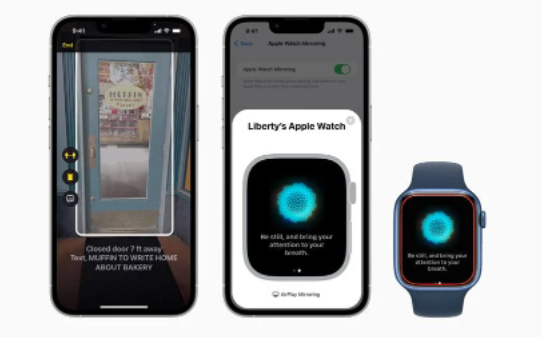

Apple is also releasing a Door Detection tool for people who are blind or have low vision. When a user arrives at a new location, Door Detection will use the iPhone's LiDAR Scanner, camera, and machine learning to locate a door, estimate its distance, and tell whether it is open or closed. Because the feature requires LiDAR, it will most likely be limited to the iPhone 12 Pro and iPhone 13 Pro models and select iPad Pro models. Apple is demonstrating several other accessibility features as well, including the ability to read signs and symbols around doors.

New wearable capabilities include Apple Watch Mirroring. This feature lets users control their Apple Watch from the iPhone with assistive features such as Switch Control and Voice Control. VoiceOver is gaining more than 20 new languages, including Bengali and Catalan.